@@ -14,27 +14,44 @@
year = {1980},
}

+@Book{delfs_knebl,
+ title = "Introduction to cryptography",
+ author = "Delfs, Hans and Knebl, Helmut",
+ publisher = "Springer",
+ series = "Information Security and Cryptography",
+ date = {2007-03},
+ note = {{ISBN:} 978-3-540-49243-6},
+ address = "Berlin, Germany",
+ language = "en"
+}
+
@TechReport{rfcgzip,
author = {L. Peter Deutsch and Jean-Loup Gailly and Mark Adler and L. Peter Deutsch and Glenn Randers-Pehrson},
date = {1996-05},
- title = {GZIP file format specification version 4.3},
+ title = {{GZIP} file format specification version 4.3},
+ doi = {10.17487/rfc1952},
number = {1952},
type = {RFC},
+ url = {https://www.rfc-editor.org/rfc/rfc1952},
howpublished = {Internet Requests for Comments},
issn = {2070-1721},
month = {May},
- publisher = {RFC},
+ publisher = {{RFC} Editor},
year = {1996},
}

@TechReport{rfcansi,
- author = {K. Simonsen and},
+ author = {K. Simonsen},
+ date = {1992-06},
title = {Character Mnemonics and Character Sets},
+ doi = {10.17487/rfc1345},
number = {1345},
type = {RFC},
+ url = {https://www.rfc-editor.org/rfc/rfc1345},
howpublished = {Internet Requests for Comments},
issn = {2070-1721},
month = {June},
+ publisher = {{RFC} Editor},
year = {1992},
}

@@ -48,23 +65,6 @@
year = {2019},
}

-@TechReport{iso-ascii,
- author = {ISO/IEC JTC 1/SC 2 Coded character sets},
- date = {1998-04},
- institution = {International Organization for Standardization},
- title = {Information technology — 8-bit single-byte coded graphic character sets — Part 1: Latin alphabet No. 1},
- type = {Standard},
- address = {Geneva, CH},
- key = {ISO8859-1:1998},
- volume = {1998},
- year = {1998},
-}
-
-@TechReport{isoutf,
- author = {ISO},
- title = {ISO/IEC 10646:2020 UTF},
-}
-
@Article{ju_21,
author = {Philomin Juliana and Ravi Prakash Singh and Jesse Poland and Sandesh Shrestha and Julio Huerta-Espino and Velu Govindan and Suchismita Mondal and Leonardo Abdiel Crespo-Herrera and Uttam Kumar and Arun Kumar Joshi and Thomas Payne and Pradeep Kumar Bhati and Vipin Tomar and Franjel Consolacion and Jaime Amador Campos Serna},
date = {2021-03},
@@ -134,7 +134,7 @@
}

@Article{dna_structure,
- author = {J. D. WATSON and F. H. C. CRICK},
+ author = {J. Watson and F. Crick},
date = {1953-04},
journaltitle = {Nature},
title = {Molecular Structure of Nucleic Acids: A Structure for Deoxyribose Nucleic Acid},
@@ -199,18 +199,8 @@
publisher = {Springer Science and Business Media {LLC}},
}

-@Book{delfs_knebl,
- author = {Delfs, Hans and Knebl, Helmut},
- date = {2007},
- title = {Introduction to Cryptography},
- isbn = {9783540492436},
- pages = {368},
- publisher = {Springer},
- subtitle = {Principles and Applications (Information Security and Cryptography)},
-}
-
@Article{cc14,
- author = {Kashfia Sailunaz and Mohammed Rokibul Alam Kotwal and Mohammad Nurul Huda},
+ author = {Kashfia Sailunaz and Mohammed Kotwal and Mohammad Huda},
date = {2014-03},
journaltitle = {International Journal of Computer Applications},
title = {Data Compression Considering Text Files},
@@ -234,22 +224,26 @@
}

@Article{huf52,
- author = {Huffman, David A.},
- title = {A Method for the Construction of Minimum-Redundancy Codes},
- number = {9},
- pages = {1098-1101},
- volume = {40},
- added-at = {2009-01-14T00:43:43.000+0100},
- biburl = {https://www.bibsonomy.org/bibtex/2585b817b85d7278b868329672ddded96/dret},
- description = {dret'd bibliography},
- interhash = {d00a180c1c2e7851560c2d51e0fd8f92},
- intrahash = {585b817b85d7278b868329672ddded96},
- journal = {Proceedings of the Institute of Radio Engineers},
- keywords = {imported},
- month = {September},
- timestamp = {2009-01-14T00:43:44.000+0100},
- uri = {http://compression.graphicon.ru/download/articles/huff/huffman_1952_minimum-redundancy-codes.pdf},
- year = {1952},
+ author = {David Huffman},
+ date = {1952-09},
+ journaltitle = {Proceedings of the {IRE}},
+ title = {A Method for the Construction of Minimum-Redundancy Codes},
+ doi = {10.1109/jrproc.1952.273898},
+ number = {9},
+ pages = {1098--1101},
+ volume = {40},
+ added-at = {2009-01-14T00:43:43.000+0100},
+ biburl = {https://www.bibsonomy.org/bibtex/2585b817b85d7278b868329672ddded96/dret},
+ description = {dret'd bibliography},
+ interhash = {d00a180c1c2e7851560c2d51e0fd8f92},
+ intrahash = {585b817b85d7278b868329672ddded96},
+ journal = {Proceedings of the Institute of Radio Engineers},
+ keywords = {imported},
+ month = {September},
+ publisher = {Institute of Electrical and Electronics Engineers ({IEEE})},
+ timestamp = {2009-01-14T00:43:44.000+0100},
+ uri = {http://compression.graphicon.ru/download/articles/huff/huffman_1952_minimum-redundancy-codes.pdf},
+ year = {1952},
}

@Article{moffat20,
@@ -406,11 +400,10 @@
publisher = {Springer Science and Business Media {LLC}},
}

-
@Book{cthreading,
author = {Quinn, Michael J.},
title = {Parallel Programming in C with MPI and OpenMP},
- isbn = {0071232656},
+ note = {{ISBN:} 0071232656},
publisher = {McGraw-Hill Education Group},
year = {2003},
}
@@ -429,7 +422,7 @@
author = {McIntosh, Colin},
date = {2013},
title = {Cambridge International Dictionary of English},
- isbn = {9781107035157},
+ note = {{ISBN:} 9781107035157},
pages = {1856},
publisher = {Cambridge University Press},
}
@@ -448,89 +441,111 @@

|

|

|

|

|

|

|

|

|

@Online{bam,

|

|

|

- title = {Sequence Alignment/Map Format Specification},

|

|

|

+ title = {{Sequence Alignment/Map Format Specification}},

|

|

|

url = {https://github.com/samtools/hts-specs},

|

|

|

urldate = {2022-09-12},

|

|

|

}

|

|

|

|

|

|

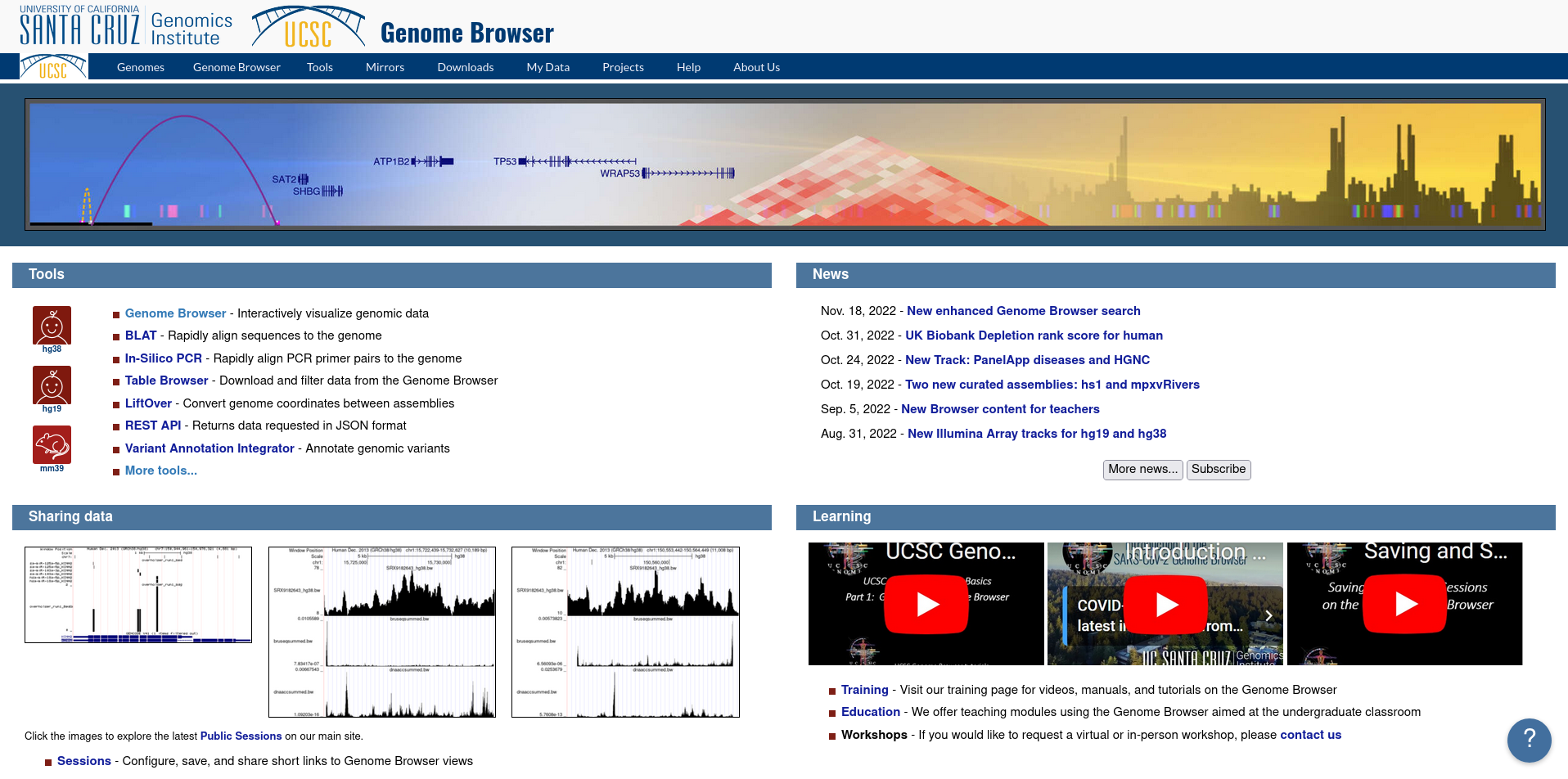

@Online{ucsc,

|

|

|

- title = {UCSC Genome Browser},

|

|

|

+ title = {{UCSC University of California Santa Cruz - Genome Browser}},

|

|

|

url = {https://genome.ucsc.edu/},

|

|

|

urldate = {2022-10-28},

|

|

|

}

|

|

|

|

|

|

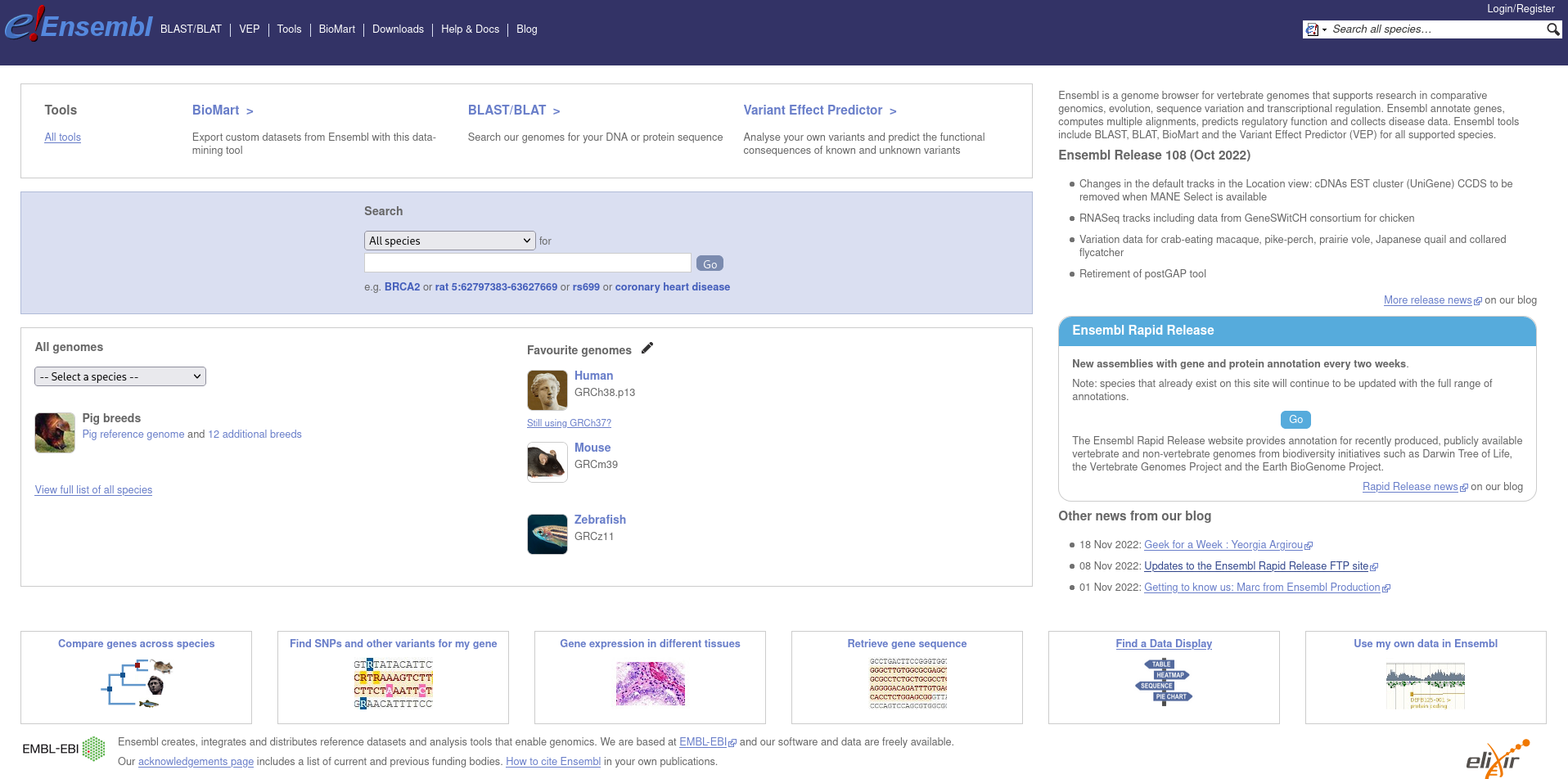

@Online{ensembl,
- title = {ENSEMBL Project},
+ title = {{The Ensembl Project}},
url = {http://www.ensembl.org/},
urldate = {2022-10-24},
}

-@Online{ga4gh,
- title = {Global Alliance for Genomics and Health},
- url = {https://github.com/samtools/hts-specs.},
- urldate = {2022-10-04},
-}
-
@Online{bed,
- title = {BED Browser Extensible Data},
+ title = {{BED -- Browser Extensible Data}},
url = {https://samtools.github.io/hts-specs/BEDv1.pdf},
urldate = {2022-10-20},
}

@Online{illufastq,
- title = {Illumina FASTq file structure explained},
+ title = {{Illumina FASTq file structure explained}},
url = {https://support.illumina.com/bulletins/2016/04/fastq-files-explained.html},
urldate = {2022-11-17},
}

@Online{twobit,
- editor = {UCSC University of California Sata Cruz},
+ editor = {{UCSC -- University of California Santa Cruz}},
title = {TwoBit File Format},
url = {https://genome-source.gi.ucsc.edu/gitlist/kent.git/raw/master/src/inc/twoBit.h},
urldate = {2022-09-22},
}

@Online{ftp-igsr,

|

|

|

- title = {IGSR: The International Genome Sample Resource},

|

|

|

+ title = {{IGSR -- The International Genome Sample Resource}},

|

|

|

url = {https://ftp.1000genomes.ebi.ac.uk},

|

|

|

urldate = {2022-11-10},

|

|

|

}

|

|

|

|

|

|

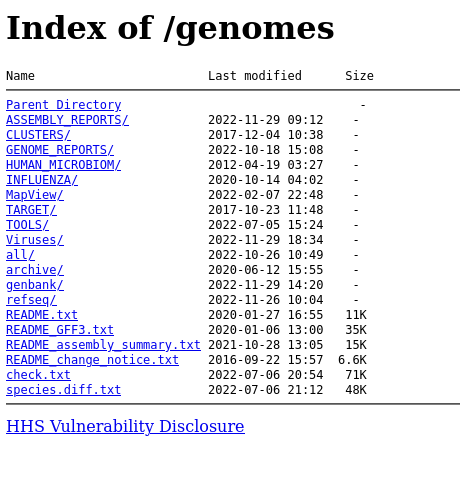

@Online{ftp-ncbi,

|

|

|

- title = {NCBI National Center for Biotechnology Information},

|

|

|

+ title = {{NCBI -- National Center for Biotechnology Information}},

|

|

|

url = {https://ftp.ncbi.nlm.nih.gov/genomes/},

|

|

|

urldate = {2022-11-01},

|

|

|

}

|

|

|

|

|

|

@Online{ftp-ensembl,

|

|

|

- title = {ENSEMBL Rapid Release},

|

|

|

+ title = {{Ensembl FTP-Server}},

|

|

|

url = {https://ftp.ensembl.org},

|

|

|

urldate = {2022-10-15},

|

|

|

}

|

|

|

|

|

|

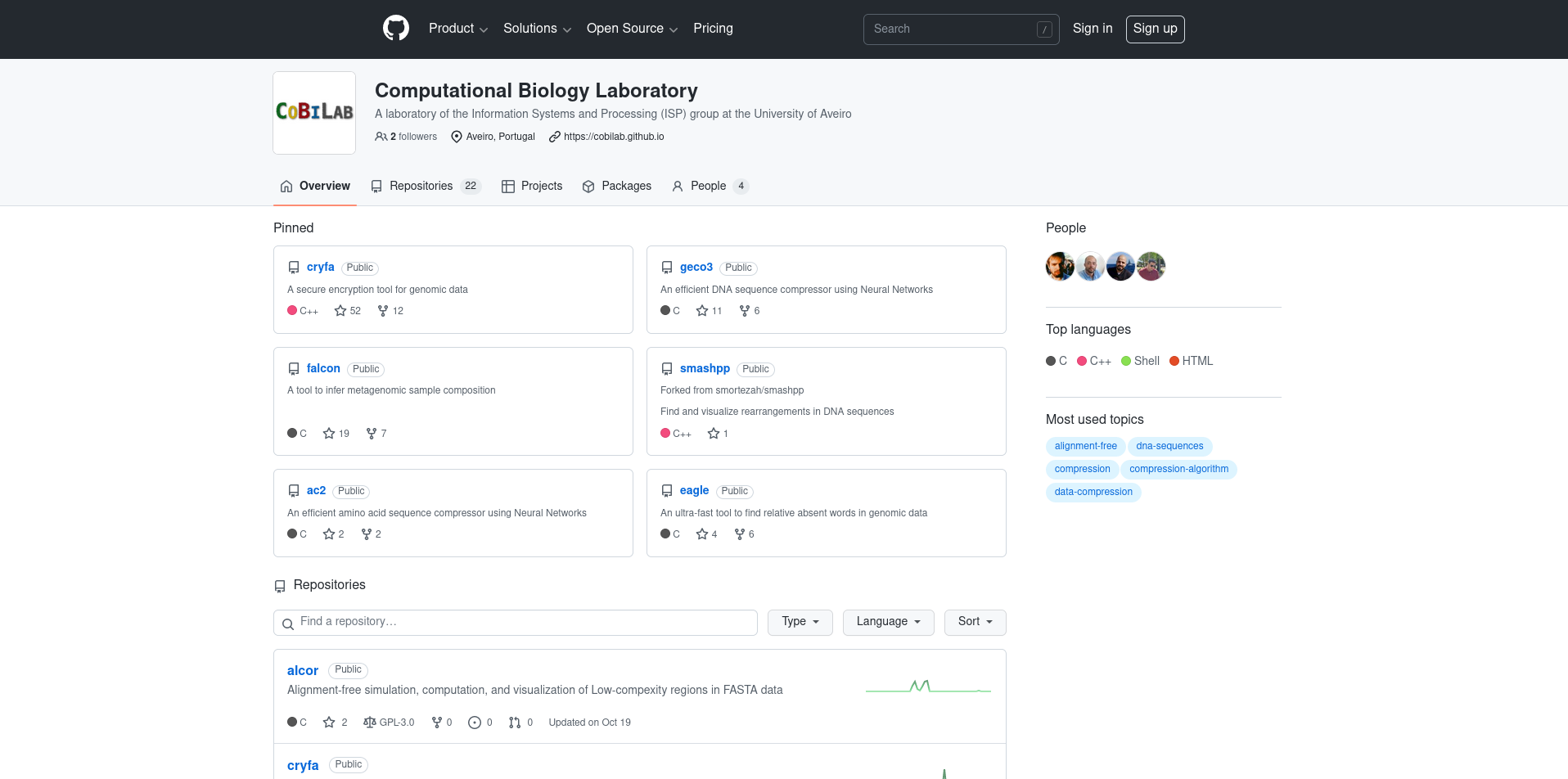

@Online{geco-repo,
- title = {Repositories for the three versions of GeCo},
+ title = {{Repositories for the three versions of GeCo}},
url = {https://github.com/cobilab},
urldate = {2022-11-19},
}

@Online{code-analysis,
editor = {Kirsten, S and Nick Bloor and Sarah Baso and James Bowie and Evgeniy Ryzhkov and Iberiam and Ann Campbell and Jonathan Marcil and Christina Schelin and Jie Wang and Fabian and Achim and Dirk Wetter},
- title = {Static Code Analysis},
+ title = {{Static Code Analysis definition}},
url = {https://owasp.org/www-community/controls/Static_Code_Analysis},
urldate = {2022-11-20},
}

@Online{gpl,
- title = {GNU Public License},
+ title = {{GPL - GNU Public License description}},
url = {http://www.gnu.org/licenses/gpl-3.0.html},
urldate = {2022-11-20},
}

@Online{mitlic,
- title = {MIT License},
+ title = {{MIT license description}},
url = {https://spdx.org/licenses/MIT.html},
urldate = {2022-11-23}
}

+@TechReport{rfchttp,
+ author = {R. Fielding and J. Gettys and J. Mogul and H. Frystyk and L. Masinter and P. Leach and T. Berners-Lee},
+ date = {1999-06},
+ title = {Hypertext Transfer Protocol -- {HTTP}/1.1},
+ doi = {10.17487/rfc2616},
+ url = {https://www.rfc-editor.org/rfc/rfc2616},
+ publisher = {{RFC} Editor},
+}
+
+@TechReport{iso-ascii,
+ author = {{ISO/IEC} 8859-1:1998},
+ date = {2020-12},
+ institution = {International Organization for Standardization, Geneva, Switzerland.},
+ title = {Information technology — 8-bit single-byte coded graphic character sets — Part 1: Latin alphabet No. 1},
+ type = {Standard},
+ url = {https://www.iso.org/standard/28245.html},
+ year = {1998},
+}
+
+@TechReport{isoutf,
+ author = {{ISO/IEC} 10646:2020},
+ date = {2020-12},
+ institution = {International Organization for Standardization, Geneva, Switzerland.},
+ title = {Information technology — Universal coded character set {UCS}},
+ url = {https://www.iso.org/standard/76835.html},
+ year = {1991},
+}
+
@Comment{jabref-meta: databaseType:biblatex;}